In the previous post I tried emulating higher precision by using two 10-bit precision floats, but couldn’t get it working.

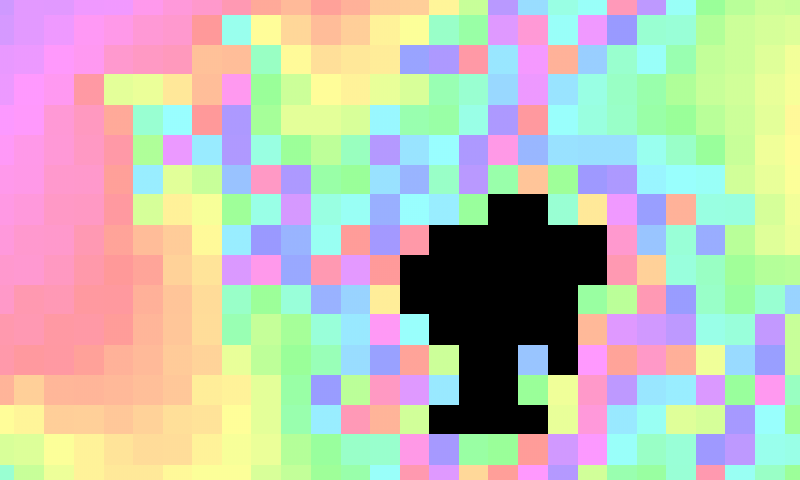

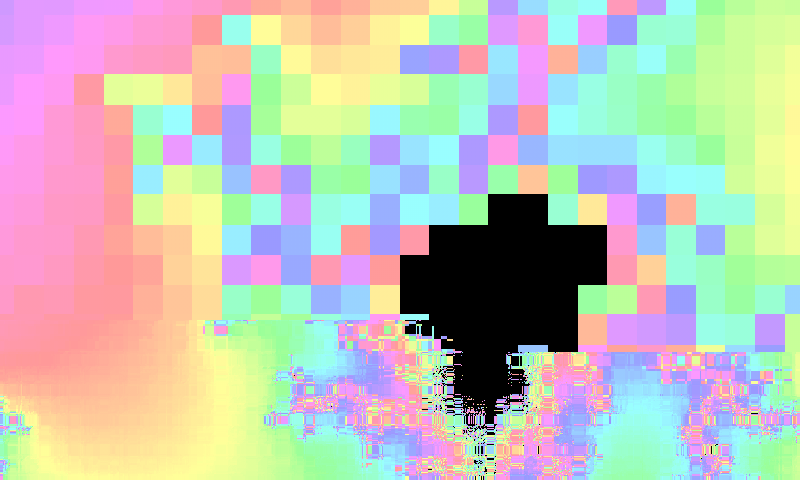

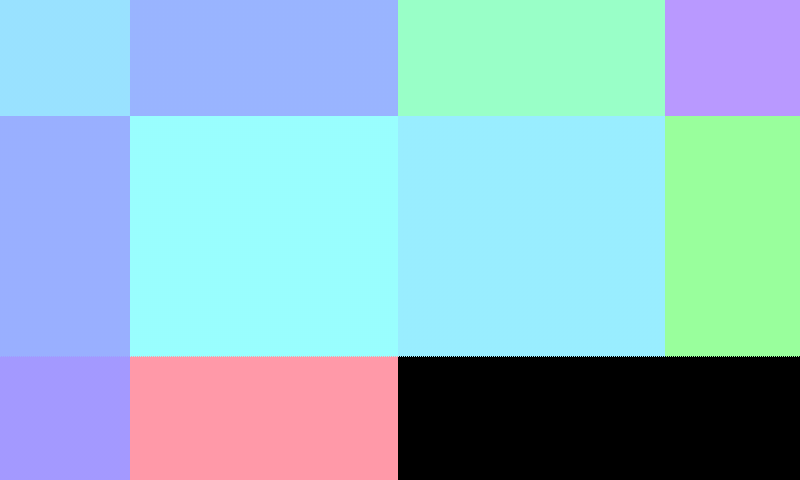

As you can see in the lower part of the screenshots, the precision did increase, but not by much. The upper part of the image remains blocky because the shader runs out of memory on my device and only updates part of the image. That happens because I raised the number of iterations to 1024. The final app should be able to handle that many iterations, so this is another problem that needs to be solved. I also changed the color of the generated image to make the difference more apparent.

Generating a geometry that has one vertex per pixel

Let’s do the calculations in the vertex shader instead of the fragment shader. OpenGL ES 2 guarantees high precision (highp) floats in vertex shaders but makes them optional in fragment shaders, so generating the fractal there should greatly increase the precision. Vertex shaders are executed once per polygon vertex and not once per screen pixel, so we need to create a geometry that contains exactly one vertex per pixel of the screen and fill the screen with it.

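    import java.nio.FloatBuffer;
    import java.nio.ShortBuffer;

    public class Geometry {
        private ShortBuffer indices;
        private FloatBuffer buffer;
        ...
    }

    private Geometry generatePixelGeometry(int w, int h) {
        // One index and one (x, y, z) position per pixel.
        ShortBuffer indices = ShortBuffer.allocate(w*h);
        FloatBuffer buffer = FloatBuffer.allocate(3*w*h);
        for(int i = 0; i < h; i++) {
            for(int j = 0; j < w; j++) {
                // Center of pixel (j, i) in normalized device coordinates [-1, 1].
                buffer.put(-1 + (2*j + 1)/(float)w);
                buffer.put(-1 + (2*i + 1)/(float)h);
                buffer.put(0);
                // Only valid while w*h fits in a short.
                indices.put((short)(i*w+j));
            }
        }
        // Flip both buffers so they can be read from the start.
        buffer.flip();
        indices.flip();
        return new Geometry(indices, buffer);
    }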

This generates a geometry that contains one vertex in the middle of every pixel on a screen of resolution w x h. Core OpenGL ES 2 supports short indexing at most and doesn’t support int indexing for vertices. That’s why we use a ShortBuffer for the indices buffer. It also means that we have to split our geometry into smaller pieces, because the max value for a Java short is 32767. The best option is to generate a single geometry that has fewer vertices than that and reuse it several times across the screen using multiple draw calls. Either way, we have to make sure that the final scene has exactly one vertex in every pixel of the screen.
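Here is a rough sketch of how the splitting could be planned. It is not the code from the app: `TilePlan` and its members are hypothetical names, and it assumes each piece is a full-width band of pixel rows whose y coordinates are computed with the full screen height, so that bands differ only by a translation in y.

```java
/** Hypothetical helper: plans how to split a w x h pixel grid into row bands. */
final class TilePlan {
    /** Largest value a Java short can hold; the limit the post works with. */
    static final int MAX_VERTICES = Short.MAX_VALUE; // 32767

    final int rowsPerTile; // full-width pixel rows in one band
    final int tileCount;   // draw calls needed to cover the screen

    TilePlan(int w, int h) {
        if (w <= 0 || h <= 0 || w > MAX_VERTICES) {
            throw new IllegalArgumentException("unsupported size " + w + "x" + h);
        }
        // As many complete rows as fit under the vertex limit.
        rowsPerTile = Math.min(h, MAX_VERTICES / w);
        // Ceiling division: the last band may extend past the top edge,
        // where its surplus vertices fall outside the visible range.
        tileCount = (h + rowsPerTile - 1) / rowsPerTile;
    }

    /** Y translation for band k in normalized device coordinates (assumed layout). */
    float yOffset(int k, int h) {
        return 2f * k * rowsPerTile / h;
    }
}
```

For a 1080 x 1920 screen this plan gives bands of 30 rows (32400 vertices each) and 64 draw calls. Whether bands, squares, or some other shape work best on a given device is something I have not measured.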

The vertex shader

    attribute vec3 position;

    uniform mat4 modelViewMatrix;
    uniform float MAX_ITER;
    uniform vec2 scale;
    uniform vec2 offset;

    varying vec4 rgba;

    vec4 color(float value, float radius, float max);

    vec2 iter(vec2 z, vec2 c)
    {
        // Complex number equivalent of z*z + c
        return vec2(z.x*z.x - z.y*z.y, 2.0*z.x*z.y) + c;
    }

    void main(void)
    {
        vec4 posit = modelViewMatrix * vec4(position, 1.);
        vec2 c = 2.*(scale*posit.xy + offset);
        vec2 z = vec2(0.);
        int i;
        // Named maxIter so the built-in max() function is not shadowed.
        int maxIter = int(MAX_ITER);
        float radius = 0.;
        for( i=0; i<maxIter; i++ ) {
            // radius holds |z|^2, so 16. corresponds to an escape radius of 4.
            radius = z.x*z.x + z.y*z.y;
            if( radius > 16.) break;
            z = iter(z,c);
        }
        // Points that never escape get the value 0.0.
        float value = (i == maxIter ? 0.0 : float(i));
        rgba = color(value, radius, MAX_ITER);
        gl_Position = posit;
    }
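To sanity-check the shader, the same escape-time loop can be mirrored on the CPU. This is only a sketch: `EscapeTime` and `iterations` are names made up for illustration, and Java’s 32-bit float arithmetic may round differently from a given GPU, so counts for points close to the boundary of the set can differ.

```java
/** Hypothetical CPU-side mirror of the vertex shader's loop, for sanity checks. */
final class EscapeTime {
    /**
     * Returns the iteration at which |z|^2 first exceeds 16,
     * or maxIter if that never happens within maxIter iterations.
     */
    static int iterations(float cx, float cy, int maxIter) {
        float zx = 0f;
        float zy = 0f;
        int i;
        for (i = 0; i < maxIter; i++) {
            float radius = zx * zx + zy * zy; // squared magnitude, as in the shader
            if (radius > 16f) {
                break;
            }
            // z = z*z + c, written out for real and imaginary parts.
            float nextX = zx * zx - zy * zy + cx;
            zy = 2f * zx * zy + cy;
            zx = nextX;
        }
        return i;
    }
}
```

A return value equal to `maxIter` corresponds to the case the shader maps to the value 0.0, that is, a point treated as inside the set.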

The vertex shader has to take the modelViewMatrix into account because we split the geometry into multiple pieces and place them at different positions in the scene. This had to be done because a single geometry covering the whole screen would need more vertices than a short index can address.
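As an illustration of what such a per-piece matrix could look like, here is a minimal sketch that builds a pure translation as a column-major float array, the layout that glUniformMatrix4fv expects in OpenGL ES 2. `TileMatrix` and its methods are hypothetical names, and the assumption that pieces differ only by a translation is mine; the app may well use a different transform.

```java
/** Hypothetical helper: builds a per-piece matrix as a column-major float[16]. */
final class TileMatrix {
    /** Translation by (tx, ty, 0). */
    static float[] translation(float tx, float ty) {
        float[] m = new float[16];
        m[0] = m[5] = m[10] = m[15] = 1f; // identity diagonal
        m[12] = tx;                        // the fourth column holds the translation
        m[13] = ty;
        return m;
    }

    /** Computes m * (x, y, z, 1) and returns its xy part, like posit.xy in the shader. */
    static float[] transformXY(float[] m, float x, float y, float z) {
        return new float[] {
            m[0] * x + m[4] * y + m[8] * z + m[12],
            m[1] * x + m[5] * y + m[9] * z + m[13]
        };
    }
}
```

With this layout, a vertex at (-0.75, -0.9) in a piece translated by (0, 0.5) ends up at (-0.75, -0.4), which is the position the shader then turns into the complex number c.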

The fragment shader

    precision mediump float;

    varying vec4 rgba;

    void main()
    {
        gl_FragColor = rgba;
    }

The fragment shader is as simple as it can be. It just receives the rgba vector from the vertex shader and draws it to the frame buffer.

Results

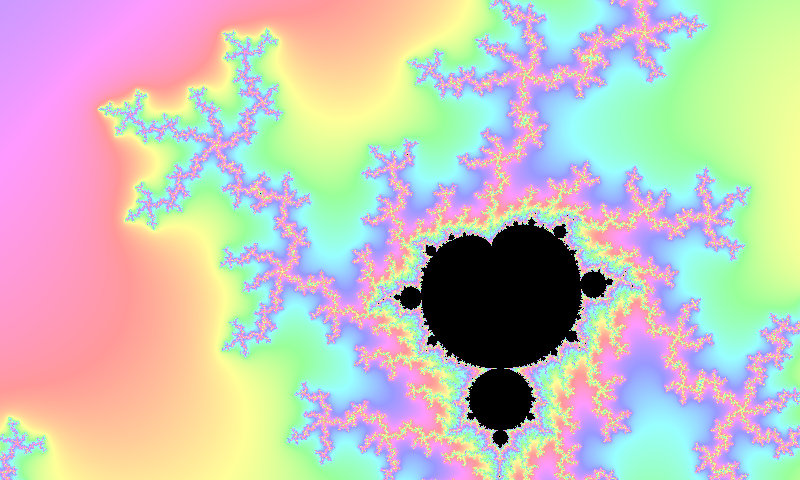

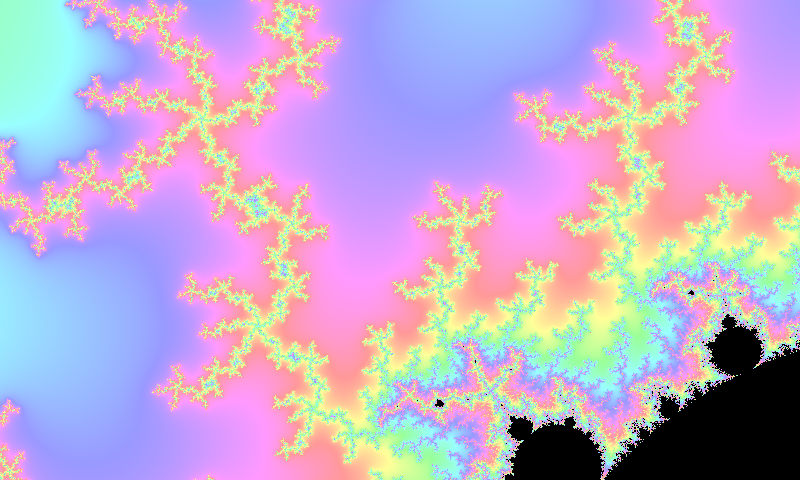

That’s much better!

The fractal renders slightly slower than with the lower-precision shader, but still really quickly, and it does 1024 iterations without running out of memory. Let’s see how much we can zoom in now.

In fragment mode, the pixels start filling out the screen at a scale of 0.0002, a zoom level of 5000x. Switching to vertex mode instead shows an amazing difference. On my device, highp floats in the vertex shader are normal 32-bit floats with 23 bits of precision, while the mediump 16-bit floats in the fragment shader only have 10 bits of precision. That means the number of precision bits more than doubles. A single blocky pixel from fragment mode can be zoomed in vertex mode until it is bigger than the entire image was in fragment mode. In fact, pixels first became apparent at a scale of 2*10^(-6). See the results for yourself on Google Play: GPU Mandelbrot.
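The bit counts can be turned into numbers with a few lines of arithmetic. The sketch below only compares the relative spacing of neighbouring floats for 10 and 23 mantissa bits; `PrecisionGap` is a made-up name, and nothing here models what a particular GPU actually does.

```java
/** Hypothetical helper: compares float spacing for two mantissa widths. */
final class PrecisionGap {
    /** Relative spacing of floats near 1.0 with the given number of mantissa bits. */
    static double relativeSpacing(int mantissaBits) {
        return Math.scalb(1.0, -mantissaBits); // exactly 2^(-mantissaBits)
    }

    public static void main(String[] args) {
        double mediump = relativeSpacing(10);
        double highp = relativeSpacing(23);
        System.out.println("10-bit spacing near 1.0: " + mediump);
        System.out.println("23-bit spacing near 1.0: " + highp);
        System.out.println("ratio: " + mediump / highp);
    }
}
```

On paper, 13 extra bits make the spacing 8192 times finer, while the scales I measured (0.0002 versus 2*10^(-6)) differ by a factor of about 100. This arithmetic alone does not explain that gap.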

In the next post I’ll give double emulation one more try.
